Bootstrapped federated data governance by introducing a strategy and optimizing the capabilities below to reinvigorate the 50+ constituent DG programs across divisions, global regions, and corporate functions.

Global Data Governance Management:

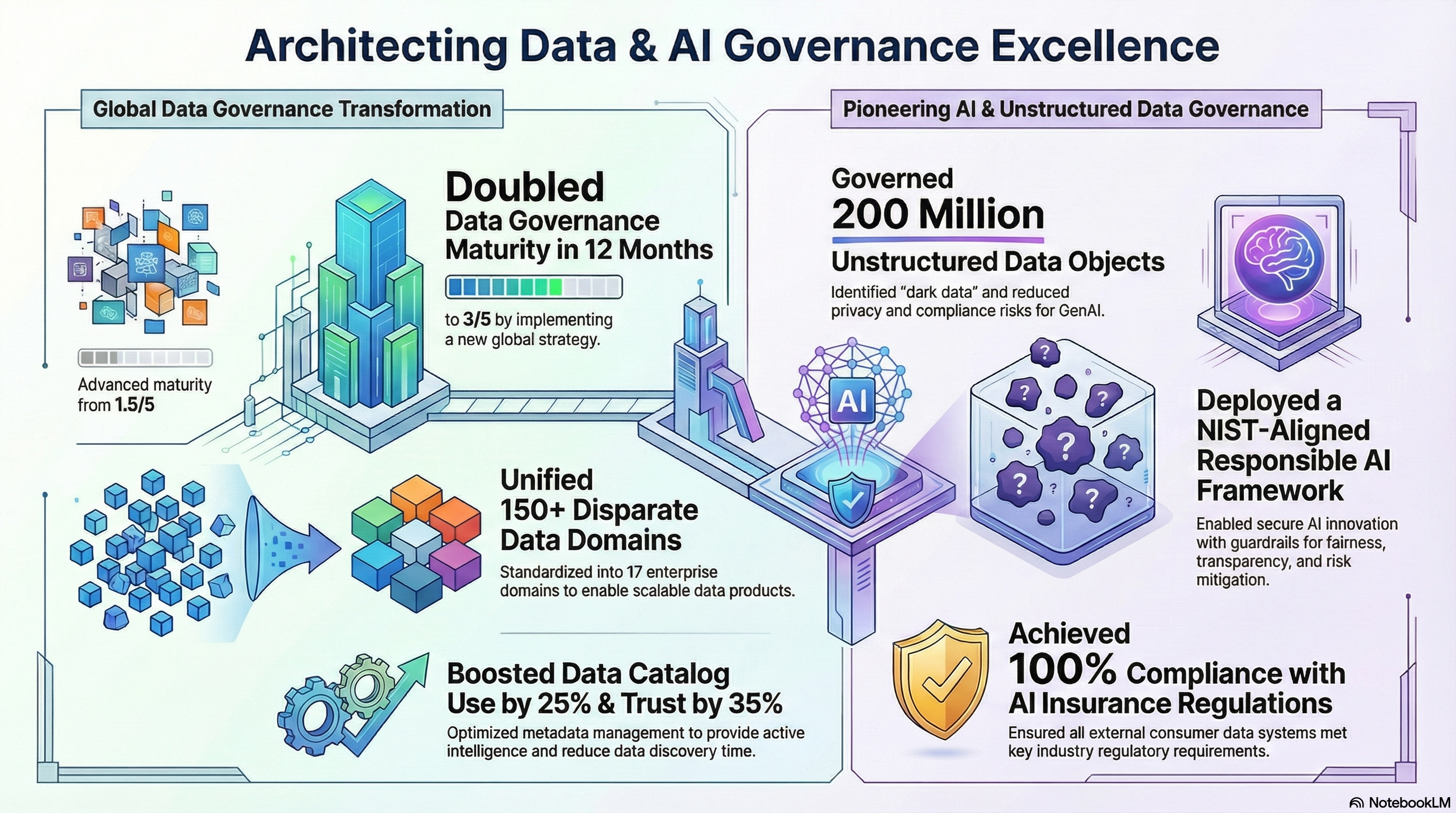

* Data Strategy & Maturity: Assessed the maturity of federated data governance at 1.5/5, then formulated the first global MDGO strategy, which balances offensive data enablement for business value (data products) with defensive data risk management (policies & controls). The strategy was socialized with data VPs and adopted by peer Directors & Stewards, doubling maturity to 3/5 in 12 months.

* Global Data Domains (GDD) and Data Products: Resolved misalignment across 150+ disparate global data domains by standing up 17 standardized enterprise GDDs (e.g., Claims). This addressed the core issue of non-conforming CDEs across 50+ global DG programs and resulted in accurate executive reporting. It also enabled the launch of scalable, reusable, business-domain-oriented (e.g., Dental) data products in a mesh architecture, accelerating time-to-insight for analytics teams.

* Metadata Management: Increased data catalog utilization by 25% (US metric) by optimizing metadata management: shifting from passive to active ingestion, generating relevant insights, and building role-based data literacy. This provided active data intelligence, reduced data discovery time for analysts, and increased trust in enterprise data assets by 35%.

* Data Quality: Transitioned DQ management from Data Engineering to MDGO for stewardship. Formalized the entire DQ practice: DQ standards (rules, dimensions); DQ architecture (DQ pipelines, newly introduced Data Contracts, DQ reporting); DQ practices (profile, cleanse, enrich, validate, and newly introduced observe); revitalized DQ issue management (root cause analysis, remediation, escalation, and reporting); and DQ operations moving towards automation (rule design, execution, results, and published dashboards).
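
As an illustration of the DQ standards and operations described above, the following is a minimal sketch of a rule tied to a DQ dimension and a pass-rate threshold. The `DQRule` class, the `evaluate` function, the sample claim records, and the threshold values are all hypothetical and are not taken from the actual MDGO tooling.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class DQRule:
    """Hypothetical DQ rule: a named check tied to a DQ dimension and a threshold."""
    name: str
    dimension: str                  # e.g. "completeness", "validity"
    check: Callable[[dict], bool]   # returns True when a record passes
    threshold: float                # minimum acceptable pass rate (0.0 to 1.0)


def evaluate(rule: DQRule, records: list) -> dict:
    """Run one rule over all records and compare the pass rate to the threshold."""
    if not records:
        return {"rule": rule.name, "dimension": rule.dimension,
                "pass_rate": 1.0, "passed": True}
    passed = sum(1 for record in records if rule.check(record))
    rate = passed / len(records)
    return {"rule": rule.name, "dimension": rule.dimension,
            "pass_rate": rate, "passed": rate >= rule.threshold}


# Illustrative records only; field names are assumptions.
claims = [
    {"claim_id": "C1", "amount": 120.0},
    {"claim_id": "C2", "amount": -5.0},
    {"claim_id": None, "amount": 40.0},
    {"claim_id": "C4", "amount": 10.0},
]

rules = [
    DQRule("claim_id_present", "completeness",
           lambda r: r.get("claim_id") is not None, 0.95),
    DQRule("amount_non_negative", "validity",
           lambda r: r.get("amount") is not None and r["amount"] >= 0, 0.70),
]

results = [evaluate(rule, claims) for rule in rules]
for result in results:
    print(result)
```

Results in this shape could feed a DQ dashboard or trigger the issue-management process when a rule falls below its threshold.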

Defensive Data Risk Management:

* Data Security: Aligned data security classification, labeling, and tagging of sensitive data between structured/unstructured DG and DSPM tools, resulting in uniform data discovery and security posture (DP/DLP, DSPM/CSPM). Explored policy-based access controls (DAG/PAM) for non-human (AI) and persona/risk-based access, coupled with usage analytics for continuous optimization.

* Data Policies and Standards: Created awareness of the need for data policies independent of the existing Records Management policies. Initiated monitoring of DG standards adoption, escalating any non-compliance by global DG programs.

* Data Risk and Compliance: Established data risk as a category within the Non-Financial Risk Committee framework. Performed process-risk-control analysis of DG operations to establish 100+ DG controls with data and role accountabilities for data risk management. Introduced a DG checklist as a preliminary step for inherent-risk evaluation of third-party acquisitions or integrations, ensuring governance and MetLife policy compliance.

* ESG-CSRD Data Governance: Governed the data for the Environmental, Social, and Governance – Corporate Sustainability Reporting Directive with the rigor of a regulatory data domain for accuracy, integrity, timeliness and reputational risk mitigation.

AI and Model Governance:

* Model Data Governance: Addressed the gap of governed data supply chains for AI/ML models, in parallel with the existing model risk management, improving the accuracy of actionable insights for decision intelligence.

* Unstructured Data Governance: Procured and implemented the unstructured data governance tool Ohalo Data X-ray to classify and tag approximately 200 million objects globally, identify dark data in business domains, and reduce privacy and compliance risk, especially for GenAI. Also leveraged the tool's GenAI capability to provide novel content intelligence, plus data unification and contextualization with knowledge graphs.

* AI Governance: Enabled the NIST AI RMF-aligned AI Governance policy. This framework enabled Responsible AI innovation with guardrails to ensure fairness, transparency, and explainability for equitable use while mitigating risks. Implemented RU-AI insurance industry regulations from CO Dept of Insurance for 100% compliance of external consumer data and information systems (ECDIS) usage in underwriting.

* GenAI-enabled Legacy Modernization: Guided reverse-engineering of legacy systems with Intellisys to extract operational logic, inherent business/data rules, and decision tables for RAG, enabling GenAI use cases like chatbots.

The Data Governance Assessment was performed by interviewing all the data VPs and directors and sampling the DG processes.

Offensive Data Enablement strategy is focused on:

* Global (operational) data domains, such as the Claims data domain, are aligned to core business capabilities, such as Claims management, and standardized as a model for the constituent DG programs to align to.

* Product-oriented (e.g., Dental) data products are provisioned in a data-mesh architecture from these standardized data domains for enterprise-wide consumption needs.

* Stewardship of these business-aligned data domains and their respective product-oriented data products is paramount for optimal data asset management and utilization value (usage analytics).

* This strategy is actively supported by the BU leaders represented through the Data Governance Council.

Defensive Data Risk management

* Began with establishing data risk as a category under the Non-Financial Risk framework.

* Further, all data governance processes were analyzed to identify risks and establish appropriate risk controls (Process-Risk-Control framework).

* This effort was performed with the oversight of Operational Risk and Internal Audit leaders.

* The Risk Committee, which comprises leaders of all Governance, Risk, Compliance, Privacy, Legal, Architecture, and Info-sec functions, has evolved into the policy-making working body that shapes the Policies & Standards presented to the DGC for ratification.

Metadata management

* A new 5-tier, 15-component framework was introduced to address all aspects of the metadata lifecycle, from ingestion to provisioning.

* Accountability at the metadata-category level is strongly advocated during the curation stage, prior to ingestion into the catalog, ensuring accurate and complete metadata.

* Data policies, applicable regulations and data quality rules & thresholds are connected to CDEs in the catalog for better understanding of the business terms, related processes, and compliance needs.

* Repurposed metadata-based metrics visualization for specific end-users with actionable intent.

* Active data intelligence is provisioned for the most relevant questions directly from the catalog.
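
The curation-stage accountability and CDE linkage described above could be sketched as a simple completeness gate run before a CDE is ingested into the catalog. This is an illustrative sketch only: the `CatalogEntry` fields, the `missing_metadata` function, and the sample values are assumptions, not the actual catalog schema or the 15-component framework.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    """Hypothetical catalog record for a critical data element (CDE)."""
    cde: str
    definition: str = ""
    owner: str = ""                                        # accountable steward
    policies: list[str] = field(default_factory=list)      # linked data policies
    regulations: list[str] = field(default_factory=list)   # applicable regulations, if any
    dq_rules: list[str] = field(default_factory=list)      # linked DQ rules and thresholds


def missing_metadata(entry: CatalogEntry) -> list[str]:
    """Return the metadata categories that are still empty for this entry."""
    gaps = []
    if not entry.definition.strip():
        gaps.append("definition")
    if not entry.owner.strip():
        gaps.append("owner")
    if not entry.policies:
        gaps.append("policies")
    if not entry.dq_rules:
        gaps.append("dq_rules")
    return gaps


# Illustrative entries; names and values are made up for the example.
complete = CatalogEntry(
    cde="claim_amount",
    definition="Amount requested on a submitted claim.",
    owner="Claims Data Steward",
    policies=["Data Classification Policy"],
    dq_rules=["amount_non_negative >= 0.99"],
)
draft = CatalogEntry(cde="claim_status", definition="Lifecycle state of a claim.")

print(missing_metadata(complete))
print(missing_metadata(draft))
```

An entry with gaps would be sent back to the accountable steward for curation rather than ingested as-is.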

Unstructured data governance is introduced:

* To manage all content (docs, pdfs, ppts, architecture diagrams etc.) across all SharePoint and shared drives.

* To scan for sensitive data elements and label them appropriately to apply compliance processes.

* ML-based regular-expression patterns are synchronized across structured, unstructured, and info-sec tools for uniformity.

* Sanitized data is allowed by the divisional data leaders to be ingested into LLMs/Agents for chunking and summarization to support a variety of GenAI use cases.
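
The scan, label, and sanitize steps above can be sketched with a single shared pattern registry, which is one way to keep classification uniform across tools. This is a minimal sketch using plain regular expressions; the ML-based part is omitted, and the pattern names, the two example patterns, and the `classify` and `sanitize` functions are hypothetical rather than the configuration of any specific product.

```python
import re

# Hypothetical shared registry: one definition per sensitive-data label,
# intended to be reused by every scanning tool for consistent results.
PATTERNS = {
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def classify(text: str) -> list[str]:
    """Return the sorted labels of all sensitive-data patterns found in the text."""
    return sorted(label for label, pattern in PATTERNS.items() if pattern.search(text))


def sanitize(text: str) -> str:
    """Replace each sensitive match with its label so the text can be shared downstream."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


# Made-up sample content for illustration.
doc = "Contact jane.doe@example.com about SSN 123-45-6789."
labels = classify(doc)
clean = sanitize(doc)

print(labels)
print(clean)
```

Only content for which `classify` returns no labels after sanitization would be a candidate for ingestion into LLMs or agents.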

Legacy Modernization – a new GenAI-based approach is being explored

* Reverse-engineered all operational logic out of legacy systems.

* AI tools are being explored to extract rules and decision tables out of the extracted logic.

* A RAG knowledge base is being built from the identified rules & decision patterns to support a variety of use cases, such as chatbots.

* As the RAG knowledge base matures further, the new legacy replacement systems can be developed using vibe-coding.